The AI Brief: Backing Enduring Companies in the Super-Cycle

Now software really is eating the world

Over the next five years, AI-native applications will replace many existing SaaS applications, automate or replace 20-50% of professional services, capture 10-40% of industrial processes, and gradually infuse fintech.

At Notion Capital, we focus on four categories, each of which is being transformed by AI.

Knowledge. AI-native software is reshaping and growing the existing $300bn SaaS market, turning platforms in which users do the work into solutions that do the work for them.

Labour. AI is turning services into software, automating work once done by humans across the >$8tn professional services market, from consulting and law to accounting and market research. AI won’t enter the real economy without the new approach that helps to capture it.

Machines. AI that can interact with and learn from its physical environment will close real-world feedback loops, making industrial processes more efficient and tapping into a subset of the >$20tn global industrial output. This category includes embodied AI as well as other types of hardware-enabled software.

Money. Fintech isn’t software; it’s infrastructure, and thus beholden to different rules. But AI is already shaping aspects of how fintechs operate, and will make inroads into the global fintech TAM, which is expected to grow from $219bn in 2024 to more than $750bn in 2032.

The traditional Cloud and SaaS market is still growing by around 20% year-on-year. But the AI wave could be 10x bigger, because AI is fulfilling what SaaS always promised: to be the automation layer that replaces human labour.

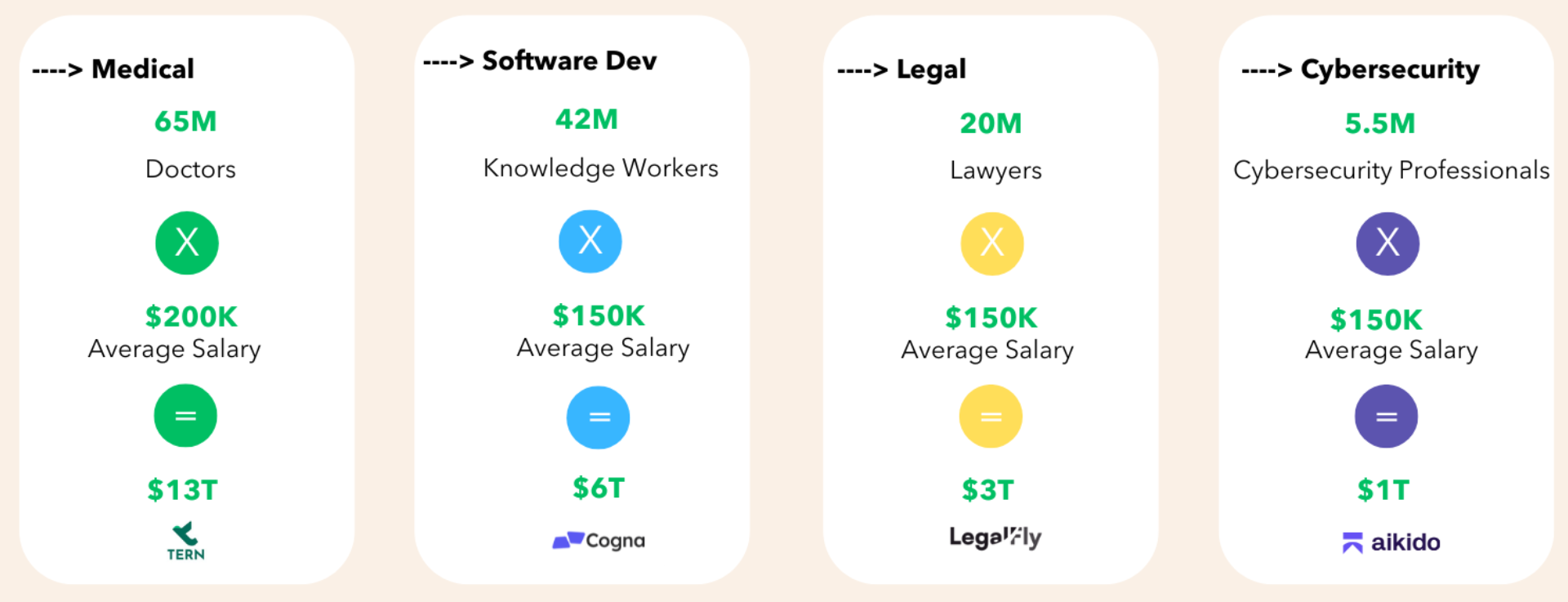

For this reason, we believe the TAM for AI tools won’t be measured by share of software budgets, but by the share of labour costs AI consumes and by the value of the outcomes that follow. As shown above, that’s $13tn in the medical market, $6tn in software development, $3tn in legal, and $1tn in professional costs in cybersecurity. And, over the years, we’ll see a bigger and bigger share of that compensation going towards AI, rather than human labour. We believe AI will reshape how companies allocate resources, shifting budgets from repetitive human labour to intelligent software – ultimately creating workplaces where people focus on meaningful work.

The application layer as growth engine

We divide the AI market into three core categories: infrastructure (foundation models, compute, and hardware), middleware and the dev productivity layer, and the end application layer. We believe the last of these (the top layer below) is where the value will largely accrue.

AI usage is already high in the enterprise. In the US alone, 40% of employees are using AI at work, up from 20% in 2023. At the same time, enterprises are struggling to implement it top-down. Many enterprises built their early pilots directly on top of foundation models, and the results have been lacklustre. Over the past three years, CEOs say only 25% of AI initiatives have delivered expected ROI. But that hasn’t dampened the appetite. The biggest roadblock is no longer data risk, but finding the right use cases to pursue and having sufficient talent to develop and implement them.

Application-layer startups can do that lifting. Constant model updates, and performance and cost differences, demand continuous optimisation for different use cases – something third-party AI app companies are better equipped to handle than in-house teams.

This is already showing up in enterprise budgets. Spend is increasingly shifting from LLMs to specialised third-party applications, as we can see below.

AI is becoming embedded in how enterprises run, handling core and more complex workflows. In 2024, a quarter of enterprise LLM spending came from innovation budgets, according to a16z’s survey of 100 US enterprise CIOs. By May 2025, that dropped to just 7%, as enterprises increasingly paid for AI through centralised IT and business unit budgets. Similarly, 64% of AI budgets are now spent on core business functions, not pilots. This reallocation reflects a growing sophistication. Companies expect AI to handle more complex workflows: 58% of companies report that they are already deploying AI agents; another 35% are actively exploring potential use cases.

As AI enters ever more complex workflows, enterprises will increasingly demand applications that not only meet regulatory, security, and data governance requirements, but that they can interrogate, trace back to source data, and correct when things go wrong. Standards like ISO/IEC 42001 are setting the new baseline for enterprise AI adoption – and only a handful of companies worldwide hold it today. Again, these concerns can be better handled by third-party applications, not model-based custom builds.

In other words, it is the end application layer that is converting AI into permanent line items, while LLMs cement their position in the infrastructure layer.

Railways, and those that ride them

This mirrors how cloud, and other revolutionary innovations before it, evolved. SaaS companies leveraged the cloud to build global businesses from day one, without the burden of computing and networking CAPEX or the need for deep internal cloud infrastructure expertise. In the same way, AI-native startups can leverage LLMs in the backend to deliver highly specialised solutions, without them or their customers building models themselves.

There is a lot of commentary on whether or not we are in an AI bubble. But, to paraphrase The Economist, bubbles come and go but that doesn’t stop the technologies that inflated them from sweeping the world. Railways. Electric light. The internet. All were accompanied by bubbles and all are here to stay.

And there’s a difference between the people who build the rails and those who run on them. Foundation models will continue to improve, but they will also become commoditised, as they all become good enough for most standard business applications – particularly when not every application needs the latest, most advanced model.

This allows application-layer companies to achieve defensibility in ways that model builders cannot. While foundation models face commoditisation pressure and massive capital requirements, startups that embed themselves in vertical workflows, build proprietary data flywheels, and establish trusted brands within their niche can create enduring moats. The closer you are to the end user and their specific workflow, the harder you are to displace.

AI is finally delivering on the SaaS promise

AI-native applications are now doing things that were impossible – and hugely valuable – for traditional software businesses just a few years ago.

Aikido uses agents to triage threats and remove 80% of the notifications that traditional cybersecurity software drowns you in. It then automatically writes the code to fix the vulnerability and pushes it to GitHub to be reviewed by a human. Today, 95% of the code that the agent suggests is accepted by engineers as good code.

Cogna generates production-ready software directly from human specifications, at 10% of the time and cost of traditional systems integrators. It replaces expensive teams of developers and external consultants, allowing any company to spin up custom software in weeks, not months.

Resistant AI uses AI-powered document fraud detection to catch fake, templated, or AI-generated documents for the likes of TikTok, eBay, and Dun & Bradstreet. That means more than 90% fewer manual reviews and decisions in under 20 seconds, freeing up human time.

These companies are rethinking entire workflows – and doing so in a thoughtful way: keeping humans in the loop to ensure high quality, but giving them their time back to focus on more valuable work.

Building a defensible AI application

But this is what everyone is building, and faster than ever before. Lovable hit $100 million ARR in eight months. Cursor went from ~$1 million to $100 million in 12 months, has now grown to $500 million in ARR, and is used by over half of the Fortune 500.

AI has changed the pace of scaling a business, but it hasn’t changed the fundamentals of building a lasting one. There are a lot of great companies that take time, particularly in enterprise. It took Figma seven years to reach $100m ARR. UiPath needed ten years to get to its first $1m ARR. Mews, founded in 2012, spent three years getting to first revenues and six to reach ~$1m ARR – when we invested. Seven years later, it is in excess of $400m ARR, growing fast and a true platform for its industry. If we only focus on ARR momentum, we risk missing real breakthroughs in favour of businesses that may not be defensible and sustainable in the long term.

So, how do you build defensibility and endurance as an AI-native founder, and what do we look for when we invest? For us, it comes down to four things.

Outstanding founder-market fit. These could be generalist technologists who’ve earned the right to lead in a category, or deep domain experts who know the problem space inside out.

Specialist, high-value, globally relevant markets. The company should be in a market that is large enough to create multi-billion outcomes, but niche enough to avoid heavy competition from big tech.

A compounding flywheel of defensibility. We look for companies that move fast, attract and retain their unfair share of talent, and build compounding defensibility in market insights, data, distribution, and market trust. The more specialised you are and the more specific industries you’re targeting, the more you can be a magnet for the best customers and great talent – even against the likes of Meta or OpenAI – and the harder it is for others to catch up.

ROI-generative from day one. Obviously, we look for teams with momentum – it’s one of the biggest indicators of success in tech. But we’re also interested in the quality of that growth. We commonly see AI-native companies, especially in the SME segment, with a 70-85% yearly churn rate. Those wheels will come off. That’s why we pay a lot of attention to value creation and NRR: are existing customers seeing demonstrable value, expanding usage, adding seats, running more workflows? NRR above 100% is the clearest signal that the product is solving a real problem and that you’re building a lasting business.
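To make the NRR test concrete, here is a minimal sketch. It assumes the common definition of net revenue retention: recurring revenue at the end of a period from customers who were already paying at its start, divided by that same cohort's recurring revenue at the start. The function names and every figure below are hypothetical, chosen only to illustrate the arithmetic.

```python
def net_revenue_retention(start_arr, end_arr):
    """NRR for a cohort: end-of-period ARR from customers who were paying at
    the start, divided by their starting ARR. New customers are ignored."""
    cohort_start = sum(start_arr.values())
    if cohort_start <= 0:
        raise ValueError("starting ARR must be positive")
    cohort_end = sum(end_arr.get(customer, 0.0) for customer in start_arr)
    return cohort_end / cohort_start


def logo_churn(start_arr, end_arr):
    """Share of starting customers who are no longer paying at period end."""
    lost = sum(1 for customer in start_arr if end_arr.get(customer, 0.0) <= 0)
    return lost / len(start_arr)


# Hypothetical cohort: ARR per customer at the start and end of the year.
start = {"a": 100.0, "b": 100.0, "c": 100.0, "d": 100.0}
end = {"a": 170.0, "b": 150.0, "c": 0.0, "d": 120.0, "e": 90.0}  # "e" is new

nrr = net_revenue_retention(start, end)
churn = logo_churn(start, end)
print(f"NRR: {nrr:.0%}, logo churn: {churn:.0%}")
```

In this made-up cohort one customer in four leaves, yet NRR stays above 100% because the remaining customers expand. Under the same simplifying assumption of equal-sized customers, an 80% churn rate would require the survivors to grow fivefold just to hold NRR at 100%.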

The new GTM playbook

Those traits give you a far greater chance of long-term success, but you still need to be able to design and execute a go-to-market strategy that works in the AI era. Many of the old playbooks no longer apply and B2B businesses are increasingly adopting consumer-style GTM strategies – viral, community-focused, and building where their users are – because AI gives them the levers to do so.

Upciti, which builds multi-purpose sensor technology to provide real-time data for cities, has turned city council meetings into a sales pipeline. In the US, most municipal, county, and state-level government meetings have to be open to the public, and many of these take place online, so anyone can dial in. Upciti sends an AI transcriber to every one of these meetings and uses the transcripts to enrich its CRM.

Aikido gained distribution by tapping into the vibe coding community emerging from the rise of Copilot, Cursor, Lovable, Windsurf, and Replit. Vibe coding democratised coding, but it also introduced what Aikido’s Developer Advocate, Mackenzie Jackson, calls “vulnerability-as-a-service”. AI doesn’t write secure code by default; it’s prone to common vulnerabilities like SQL injection, cross-site scripting, and path traversal attacks. So Aikido developed tools that secure AI-generated code and positioned itself as the default safety layer for this new way of working. Aikido will cement its position as vibe coding continues to enter the enterprise.

What makes these new GTM strategies possible is the existing scale and penetration of AI adoption.

At the height of the dot-com boom in 2000, there were fewer than 20 million people with a high-speed internet connection globally. In 2025, just two years into generative AI going mainstream, ChatGPT alone has 800 million weekly users, and there are around six billion users with some access to AI.

That level of distribution makes scaling easier, because there are more ready-made, sophisticated communities to tap into. Now, you build the community where the community already is, with self-service, prosumer products that can generate consumer-like virality.

The human super-cycle

Every major technology cycle has both disrupted and compounded what came before it – the PC, the internet, mobile, SaaS. AI is no different. But this time the impact is greater, because the best AI natives are rethinking how knowledge, labour, machines, and money work.

In short, AI is opening new markets and delivering the automation that SaaS always promised. That’s where we’re placing our bets.